Patroni is an advanced, open-source cluster management framework that brings true high availability to PostgreSQL environments. It was originally developed by Zalando to address the complexity of managing distributed database clusters and to ensure seamless failover, replication, and data consistency across multiple nodes. Over time, Patroni has evolved into one of the most trusted and widely adopted tools for building resilient PostgreSQL infrastructures in both on-premise and cloud-native ecosystems.

Unlike traditional replication setups that rely on manual failover procedures or custom scripts, Patroni adds an orchestration layer that automates cluster coordination. It continuously monitors the health of all nodes, detects failures within a configurable timeout, and promotes a standby replica to primary when necessary, all without human intervention. This automation minimizes downtime, protects data integrity, and keeps the service available during unexpected outages and planned maintenance.

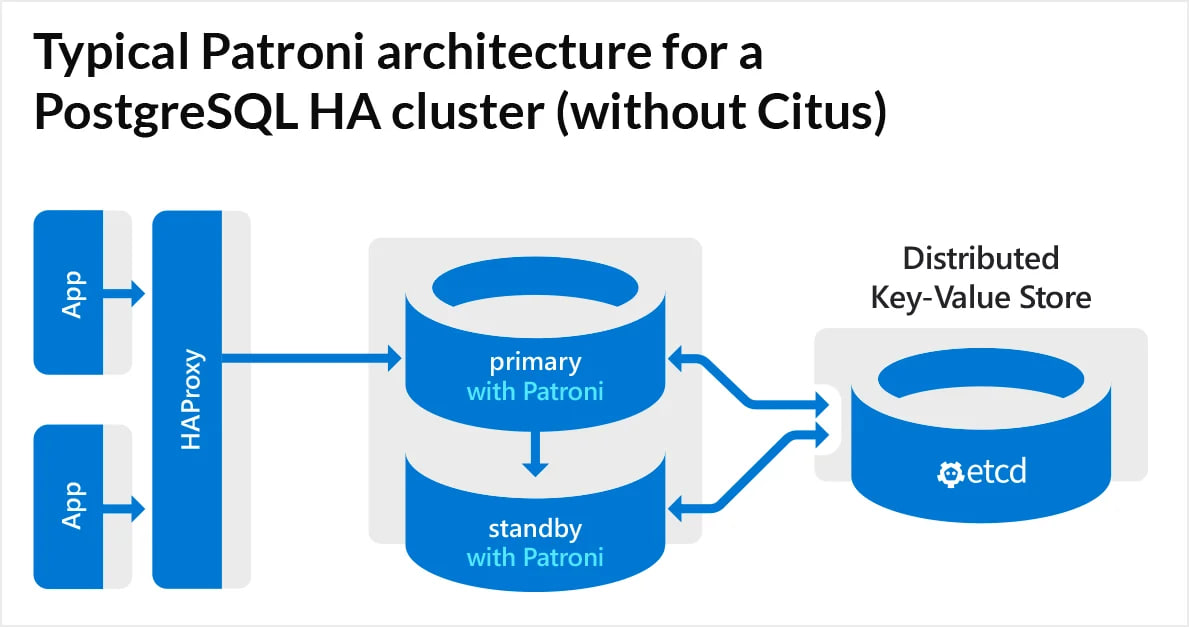

Patroni is designed with flexibility and extensibility in mind. It integrates with distributed configuration stores such as Etcd, Consul, or ZooKeeper, which act as the backbone for leader election and cluster state synchronization. Its built-in REST API lets external tools and monitoring systems query and manage the cluster, while HAProxy and PgBouncer can be placed in front of it for connection routing and pooling.

Thanks to its reliability, transparency, and strong community support, Patroni has become a de facto standard for highly available PostgreSQL deployments. Whether you’re managing mission-critical databases in enterprise infrastructure or scaling workloads in containerized environments like Kubernetes, Patroni provides the tools and automation needed to keep your systems consistent and fault-tolerant.

How a Patroni Cluster Works

A Patroni cluster consists of several components that interact with each other:

- Primary (master) – the main PostgreSQL server that handles write requests.

- Replica (standby) – replica(s) that receive data via streaming replication.

- DCS (Distributed Configuration Store) – an external configuration and cluster state store (e.g., Etcd, Consul, or ZooKeeper).

- Patroni agents – processes running on each node that manage PostgreSQL and coordinate their actions via the DCS.

Each node in the cluster runs its own Patroni daemon, which:

- Monitors the state of PostgreSQL.

- Queries the DCS for the current leader (primary).

- Can promote itself to primary if the current leader becomes unavailable.

How Patroni Operates

Patroni’s operation is based on the concept of leader election through the DCS.

- One node obtains “leadership” — it becomes the primary.

- Other nodes synchronize their data with the primary using PostgreSQL’s built-in replication.

- If the leader fails (e.g., the server becomes unavailable or unresponsive), Patroni performs an automatic failover:

  - A new leader is selected from the available replicas.

  - The cluster updates its information in the DCS.

  - Applications redirect requests to the new primary (via HAProxy, PgBouncer, or other middleware).

This ensures minimal downtime and continuous database availability.
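
The election steps above can be pictured with a toy simulation. This is only a sketch: `InMemoryDCS`, `run_cycle`, the node names, and the 30-second TTL are illustrative assumptions, and real Patroni additionally compares replication positions before promoting a replica.

```python
class InMemoryDCS:
    """Toy stand-in for Etcd/Consul: one leader key with a time-to-live."""

    def __init__(self):
        self.leader = None       # name of the node holding the lock
        self.expires_at = 0.0    # logical time at which the lock lapses

    def acquire_or_renew(self, node, now, ttl):
        """Take the lock if it is free or expired, or renew it if we hold it."""
        lock_free = self.leader is None or now >= self.expires_at
        if lock_free or self.leader == node:
            self.leader = node
            self.expires_at = now + ttl
            return True
        return False


def run_cycle(dcs, node, healthy, now, ttl=30):
    """One pass of a node's loop: returns the role the node ends up with."""
    if not healthy:
        return "down"            # a failed node cannot renew its lock
    if dcs.acquire_or_renew(node, now, ttl):
        return "primary"
    return "replica"


dcs = InMemoryDCS()
# t=0: both nodes are healthy; node-a asks first and wins the lock.
roles_t0 = [run_cycle(dcs, "node-a", True, now=0), run_cycle(dcs, "node-b", True, now=0)]
# t=10: node-a has crashed, but its lock has not expired yet.
roles_t10 = [run_cycle(dcs, "node-a", False, now=10), run_cycle(dcs, "node-b", True, now=10)]
# t=31: the lock has lapsed, so node-b takes over.
roles_t31 = [run_cycle(dcs, "node-a", False, now=31), run_cycle(dcs, "node-b", True, now=31)]
print(roles_t0, roles_t10, roles_t31)
```

The gap between t=10 and t=31 shows why failover is not instantaneous: a replica can only take over once the old leader's lock has expired.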

Patroni Features and Benefits

- Automatic failover: Role switching between nodes happens automatically upon failure, without administrator intervention.

- Integration with external systems: Patroni supports DCS (Etcd, Consul, ZooKeeper) for coordinating cluster state.

- Flexible replication configuration: You can use either synchronous or asynchronous PostgreSQL replication.

- REST API: Each node exposes an API for management, monitoring, and integration with external tools.

- External load balancer support: Patroni easily integrates with HAProxy or PgBouncer to route client connections to the current primary.
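
To illustrate how a load balancer or script can use the REST API to locate the current primary, here is a minimal sketch. It assumes Patroni's `/primary` health-check endpoint, which answers HTTP 200 only on the node holding the leader role; the helper names and the second node address are hypothetical.

```python
import urllib.error
import urllib.request


def is_primary(api_url, timeout=2.0):
    """Return True if GET <api_url>/primary answers HTTP 200."""
    try:
        with urllib.request.urlopen(f"{api_url}/primary", timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Non-200 answers (raised as HTTPError) and unreachable nodes
        # are both treated as "not the primary".
        return False


def find_primary(api_urls, probe=is_primary):
    """Return the first node whose probe succeeds, or None if there is none."""
    for url in api_urls:
        if probe(url):
            return url
    return None


# Demonstration with a fake probe so the example runs without a cluster.
nodes = ["http://10.0.0.11:8010", "http://10.0.0.12:8010"]
found = find_primary(nodes, probe=lambda url: url == nodes[1])
print(found)
```

HAProxy health checks follow the same idea: each backend is probed over HTTP and only the node that answers 200 receives write traffic.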

Example of a Patroni Topology

A simple Patroni cluster setup might look like this:

                    +------------------+
                    |     HAProxy      |
                    |    (balancer)    |
                    +--------+---------+
                             |
                   +---------+---------+
                   |                   |
            +------+-----+     +-------+------+
            | PostgreSQL |     |  PostgreSQL  |
            | (Primary)  |     |  (Replica)   |
            +------+-----+     +-------+------+
                   |                   |
              +----+----+         +----+----+
              | Patroni |         | Patroni |
              +----+----+         +----+----+
                   |                   |
                   +---------+---------+
                             |
                      +------+------+
                      |   Etcd /    |
                      |   Consul    |
                      +-------------+

Installing and Configuring Patroni

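Patroni can be installed from distribution packages, from source, or from PyPI with pip:

    pip install patroni[etcd]

Patroni also needs a PostgreSQL driver (psycopg2 or psycopg 3); depending on the Patroni version, it may have to be installed separately.

Minimal configuration example (/etc/patroni.yml):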

    scope: pg_cluster            # cluster name, identical on every node
    name: db-node-01             # unique name of this node

    restapi:
      listen: 0.0.0.0:8010
      connect_address: 10.0.0.11:8010

    etcd:
      host: 10.0.0.100:2379      # etcd client port (2380 is the peer port)

    postgresql:
      listen: 0.0.0.0:5433
      connect_address: 10.0.0.11:5433
      data_dir: /var/lib/postgresql/15/data
      authentication:
        replication:
          username: repl_user
          password: repl_secret
        superuser:
          username: pg_admin
          password: super_secure
      parameters:
        max_connections: 150
        shared_buffers: 512MB
        wal_level: replica
        hot_standby: "on"        # quoted so YAML does not read it as a boolean
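
Most of this file is identical on every node; only the node name and the two connect_address values differ. The sketch below makes that split explicit. The node names, the IP addresses beyond 10.0.0.11, and the trimmed `BASE` dictionary are assumptions for illustration, and JSON is used for output only because the Python standard library has no YAML writer.

```python
import copy
import json

# Settings shared by all nodes (trimmed to the fields relevant here).
BASE = {
    "scope": "pg_cluster",
    "restapi": {"listen": "0.0.0.0:8010"},
    "postgresql": {
        "listen": "0.0.0.0:5433",
        "data_dir": "/var/lib/postgresql/15/data",
    },
}


def node_config(name, ip):
    """Return a per-node copy of BASE with the node-specific fields filled in."""
    cfg = copy.deepcopy(BASE)    # deep copy so nested dicts are not shared
    cfg["name"] = name
    cfg["restapi"]["connect_address"] = f"{ip}:8010"
    cfg["postgresql"]["connect_address"] = f"{ip}:5433"
    return cfg


configs = [node_config(f"db-node-0{i}", f"10.0.0.1{i}") for i in (1, 2, 3)]
print(json.dumps(configs[1], indent=2))
```

Keeping scope identical and name unique is what lets the nodes find each other in the DCS as members of one cluster.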

Once all nodes are configured and Patroni is started, the coordination and replication process begins automatically.
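
Once the nodes are up, the cluster view can be checked programmatically. The sketch below summarizes a payload shaped like the response of Patroni's `/cluster` REST endpoint; the field names (`members`, `role`, `lag`) are assumed to match that endpoint, and the sample data is illustrative rather than captured from a real cluster.

```python
import json


def summarize_cluster(payload):
    """Split a /cluster-style payload into the leader name and replica lag."""
    members = payload.get("members", [])
    leaders = [m["name"] for m in members if m.get("role") == "leader"]
    replicas = {m["name"]: m.get("lag") for m in members if m.get("role") != "leader"}
    return {"leader": leaders[0] if leaders else None, "replicas": replicas}


# Illustrative payload, not real cluster output.
sample = json.loads("""
{"members": [
  {"name": "db-node-01", "role": "leader",  "state": "running"},
  {"name": "db-node-02", "role": "replica", "state": "streaming", "lag": 0}
]}
""")
summary = summarize_cluster(sample)
print(summary)
```

A missing leader or a steadily growing lag value in such a summary is usually the first sign that coordination or replication is not working as expected.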

Conclusion: Practical Tips

Patroni is more than just a layer on top of PostgreSQL — it’s a full lifecycle management system for database clusters. It combines replication, automatic failover, and dynamic configuration, making it essential for production environments where downtime is unacceptable.

From a technical perspective, Patroni acts as a “coordinator” between PostgreSQL nodes, synchronizing their state via a distributed store (Etcd, Consul, or ZooKeeper). This allows it to:

- Monitor node health – the Patroni agent on each node periodically writes its status to the DCS (Distributed Configuration Store);

- Elect a leader – if the primary node fails, a new one is automatically promoted without manual intervention;

- Update cluster configuration without downtime – changes are applied dynamically via Patroni’s REST API;

- Integrate with orchestration systems – for example, Zalando's Postgres Operator uses Patroni to run highly available PostgreSQL on Kubernetes;

- Fine-tune replica synchronization – enable synchronous replication with synchronous_mode: true, or leave it disabled (the default) for asynchronous replication, depending on data SLA requirements.
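
As an example of a dynamic configuration change, the sketch below builds, without sending, an HTTP PATCH request for Patroni's `/config` endpoint that switches synchronous_mode on. The node address is taken from the configuration example; the helper name is hypothetical, and a secured REST API would also require credentials that are not shown here.

```python
import json
import urllib.request


def build_config_patch(api_url, changes):
    """Build (but do not send) a PATCH request for the /config endpoint."""
    return urllib.request.Request(
        url=f"{api_url}/config",
        data=json.dumps(changes).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PATCH",
    )


request = build_config_patch("http://10.0.0.11:8010", {"synchronous_mode": True})
print(request.get_method(), request.full_url, request.data.decode())
# To apply the change: urllib.request.urlopen(request, timeout=5)
```

Because the change is stored in the DCS, sending it to any one node is enough for all members to pick it up.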

For better production resilience, it’s recommended to:

- Deploy at least three PostgreSQL nodes and three DCS nodes (e.g., Etcd);

- Set up HAProxy or PgBouncer for routing client connections;

- Perform regular failover tests and back up WAL files;

- Monitor the cluster with Prometheus: recent Patroni versions expose a /metrics endpoint in Prometheus format through the REST API.
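
The three-node recommendation for the DCS follows from majority arithmetic: a consensus store such as Etcd stays writable only while more than half of its members are reachable. A small sketch (the function names are illustrative):

```python
def quorum(members):
    """Smallest majority of a cluster with the given number of voting members."""
    return members // 2 + 1


def tolerated_failures(members):
    """How many members can be lost while a majority remains."""
    return members - quorum(members)


for n in (1, 2, 3, 5):
    print(f"{n} members: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Two members tolerate no failures at all, which is why three is the practical minimum and why odd sizes are preferred.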

Patroni works seamlessly with tools like pgBackRest, Grafana, Ansible, and Kubernetes, forming a reliable foundation for modern cloud infrastructures.

FAQ

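- Can Patroni be used without Etcd or Consul? Not without some DCS. Patroni requires a DCS to store cluster metadata and perform leader elections. Etcd, Consul, and ZooKeeper are common choices, and on Kubernetes the Kubernetes API itself can serve as the DCS.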

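- Is a separate server required for the DCS? It is not strictly required, but it is strongly recommended: deploying the DCS independently from the PostgreSQL nodes means that losing a database host does not also remove a DCS member and threaten quorum.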

- Does Patroni support synchronous replication? Yes, you can configure synchronous replicas by setting synchronous_mode: true.

- How can I monitor the cluster? Through the REST API (/patroni) or external tools such as Prometheus + Grafana.

- Can Patroni run in Docker? Yes, containerized environments are officially supported — there are ready-made images and a Helm Chart for Kubernetes.
