In the modern DevOps era, automating Linux systems is critical to the reliability, scalability, and security of IT infrastructure. This article presents a practical approach to advanced automation on Linux virtual machines in the Serverspace cloud. It covers five areas: moving job scheduling from cron to systemd timers, managing cloud resources through the API, containerization with Docker Swarm, kernel-level monitoring with eBPF, and backups to S3-compatible storage. Each section includes step-by-step instructions, example scripts to adapt to your environment, and practical recommendations based on current DevOps practice.

Task Scheduling Automation

Transition from cron to systemd-timer: a modern scheduling approach

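Traditional cron is widely used, but it has real limitations on modern Linux systems:

- Job output is mailed or discarded unless you redirect it yourself.
- Runs missed while the machine was off are simply skipped.
- Jobs cannot declare dependencies on other services.

systemd timers address these gaps with journal logging, catch-up of missed runs (Persistent=true), flexible calendar expressions, and native integration with the systemd ecosystem.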

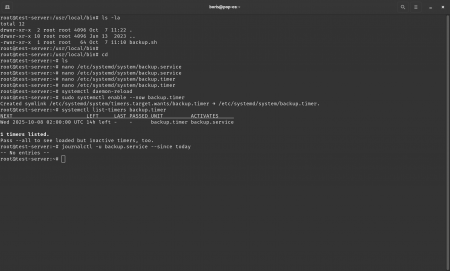

How-To: Convert a cron job into a systemd timer

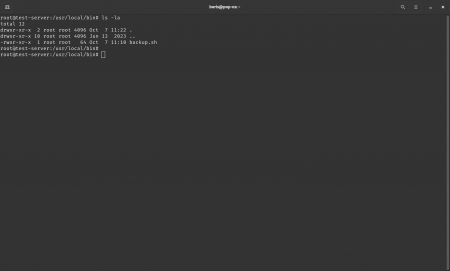

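Step 1. Create the task script /usr/local/bin/backup.sh

#!/usr/bin/env bash
set -euo pipefail
# Copy /var/www into a dated directory such as /backup/www-2025-01-31/.
# /backup must already exist; rsync creates only the final dated directory.
rsync -a /var/www/ "/backup/www-$(date +%F)/"

Set permissions:

chmod 755 /usr/local/bin/backup.sh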

Step 2. Create the service unit file /etc/systemd/system/backup.service

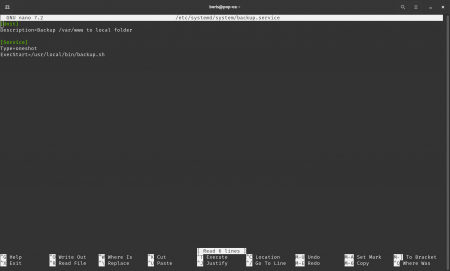

[Unit]

Description=Backup /var/www to local folder

[Service]

Type=oneshot

ExecStart=/usr/local/bin/backup.sh

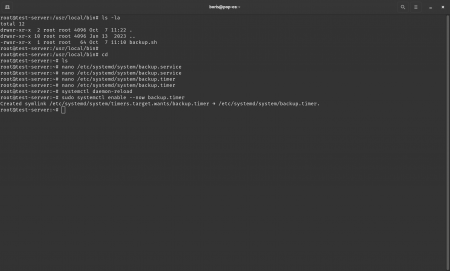

Step 3. Create the timer unit file /etc/systemd/system/backup.timer

[Unit]

Description=Run backup daily at 2 AM

[Timer]

OnCalendar=*-*-* 02:00:00

Persistent=true

[Install]

WantedBy=timers.target

Step 4. Enable the service and timer

sudo systemctl daemon-reload

sudo systemctl enable --now backup.timer

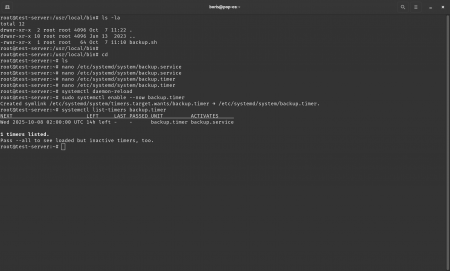

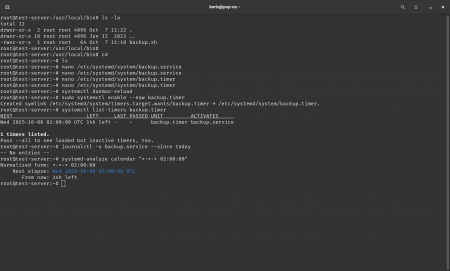

Step 5. Verify operation

systemctl list-timers backup.timer

journalctl -u backup.service --since today

Key advantages of systemd timers:

- Automatic logging to the journal, readable with journalctl.
- Catch-up of runs missed while the machine was off (Persistent=true).
- Declarative dependency management.

Together these make timers a good fit for critical scheduled tasks.

Verify schedule syntax using the built-in tool:

systemd-analyze calendar "*-*-* 02:00:00"
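When migrating many entries, it can help to translate simple cron schedules mechanically and then check each result with systemd-analyze calendar. The sketch below uses a hypothetical function name, cron_to_oncalendar. It handles only the common case: minute and hour are plain numbers or *, and the remaining three fields are *. Anything else is rejected rather than guessed. Both cron and OnCalendar= use local time by default.

```bash
#!/usr/bin/env bash
# Hypothetical helper: translate a *simple* cron schedule into an OnCalendar= value.
# Supported: minute and hour as a number or "*"; day-of-month, month and
# day-of-week must all be "*". Ranges, lists, steps and names are rejected.
cron_to_oncalendar() {
  local min hour dom mon dow extra field m h
  read -r min hour dom mon dow extra <<< "$1"
  if [[ -n $extra || $dom != "*" || $mon != "*" || $dow != "*" ]]; then
    echo "unsupported schedule: $1" >&2
    return 1
  fi
  for field in "$min" "$hour"; do
    if [[ $field != "*" && ! $field =~ ^[0-9]{1,2}$ ]]; then
      echo "unsupported field: $field" >&2
      return 1
    fi
  done
  # Convert numbers with 10# so values like "08" are not read as octal
  if [[ $min == "*" ]]; then
    m="*"
  else
    (( 10#$min <= 59 )) || return 1
    m=$(printf '%02d' "$((10#$min))")
  fi
  if [[ $hour == "*" ]]; then
    h="*"
  else
    (( 10#$hour <= 23 )) || return 1
    h=$(printf '%02d' "$((10#$hour))")
  fi
  printf '*-*-* %s:%s:00\n' "$h" "$m"
}
```

For example, cron_to_oncalendar '0 2 * * *' prints *-*-* 02:00:00, the expression used in backup.timer above.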

systemd-timer FAQ

Q: Why doesn’t the timer fire exactly at 02:00?

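A: By default, systemd timers run with AccuracySec=1min. To save wakeups, systemd may start the job at any point within one minute after the scheduled time; set AccuracySec=1s in the [Timer] section if you need tighter precision. A RandomizedDelaySec= setting, if present, adds a further random delay.

Also check that the clock is synchronized (NTP) and the time zone is correct (timedatectl), because OnCalendar= uses local time unless a time zone is given. Persistent=true does not affect precision. It makes systemd run a missed job as soon as the timer is active again, typically at the next boot.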

Q: How do I migrate complex cron jobs?

A: For complex scripts, create separate service unit files with dependency directives such as After=, Requires=, or Wants= to ensure correct execution order.
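Writing each service/timer pair by hand gets repetitive when migrating many jobs. The sketch below writes one pair per job. The function make_timer_units and its UNIT_DIR override are hypothetical helpers, not part of systemd. ExecStart= should begin with an absolute path, and ordering directives such as After= or Wants= belong in the [Unit] section, as the commented lines suggest.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write <name>.service and <name>.timer for one former cron job.
# UNIT_DIR defaults to /etc/systemd/system; override it for a dry run.
make_timer_units() {
  local name=$1 command=$2 schedule=$3
  local dir=${UNIT_DIR:-/etc/systemd/system}
  if [[ ! $name =~ ^[A-Za-z0-9_.-]+$ ]]; then
    echo "bad unit name: $name" >&2
    return 1
  fi
  cat > "$dir/$name.service" <<EOF
[Unit]
Description=$name (migrated from cron)
# Add ordering/dependencies here if needed, for example:
# After=network-online.target
# Wants=network-online.target

[Service]
Type=oneshot
ExecStart=$command
EOF
  cat > "$dir/$name.timer" <<EOF
[Unit]
Description=Schedule for $name

[Timer]
OnCalendar=$schedule
Persistent=true

[Install]
WantedBy=timers.target
EOF
}

# Example (as root):
# make_timer_units nightly-report /usr/local/bin/report.sh '*-*-* 03:15:00'
```

After generating the files, run systemctl daemon-reload and systemctl enable --now <name>.timer, as in Step 4.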

Technical Details: systemd-timer

- Debugging: systemctl status <timer>.timer, systemctl list-timers.
- Schedule testing: systemd-analyze calendar.
- Staggering concurrent runs: RandomizedDelaySec=30.

Cloud Management Scripts

API automation in the Serverspace ecosystem

Serverspace provides a powerful RESTful API for programmatic control of cloud resources. The API supports standard HTTP methods (GET, POST, PUT, DELETE) and uses JSON for data exchange, enabling automated creation, configuration, and deletion of virtual machines without manual intervention.

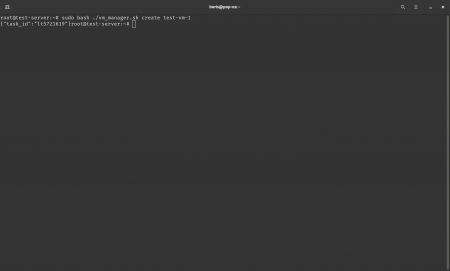

How-To: Auto-create and delete VMs via API

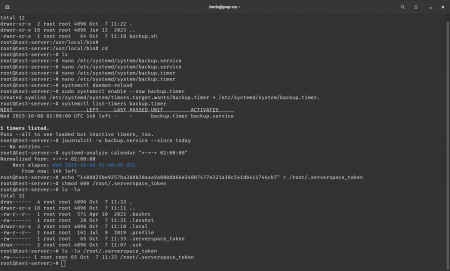

Step 1. Securely store your token

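(umask 077; printf '%s\n' "YOUR_TOKEN" > /root/.serverspace_token)

Running the write in a subshell with umask 077 creates the file with mode 600 from the start, so the token is never briefly readable by other users. The chmod below then acts as a safety net.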

chmod 600 /root/.serverspace_token
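Before a script uses the token, it can refuse to run if the file has become readable by other users. The sketch below assumes GNU stat, as shipped on Debian and Ubuntu; read_token is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the API token only if the file is private
# (mode 600 or 400). Relies on GNU stat's -c '%a' format.
read_token() {
  local file=${1:-/root/.serverspace_token}
  local mode
  mode=$(stat -c '%a' "$file") || return 1
  if [[ $mode != 600 && $mode != 400 ]]; then
    echo "refusing $file: mode $mode, expected 600 or 400" >&2
    return 1
  fi
  # $(< file) reads the file without spawning cat and strips trailing newlines
  printf '%s' "$(< "$file")"
}
```

In the manager script, TOKEN=$(read_token) could replace the plain $(< /root/.serverspace_token) read. Under set -e the script then stops if the check fails.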

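Step 2. Manager script /usr/local/bin/vm_manager.sh (requires curl and jq)

#!/usr/bin/env bash
set -euo pipefail

TOKEN=$(< /root/.serverspace_token)
ACTION=${1:-}
NAME=${2:-}
API="https://api.serverspace.io/api/v1"

if [[ -z $ACTION || -z $NAME ]]; then
  echo "Usage: $0 {create|delete} <vm-name>" >&2
  exit 1
fi

if [[ $ACTION == "create" ]]; then
  # Placeholder: replace with the numeric ID of an SSH key added to your account
  SSH_KEY_ID="YOUR_SSH_KEY_ID"
  # Build the JSON body with jq so any VM name is quoted correctly
  BODY=$(jq -n --arg name "$NAME" --argjson key "$SSH_KEY_ID" '{
    location_id: "am2",
    cpu: 1,
    ram_mb: 2048,
    image_id: "Debian-12-X64",
    name: $name,
    networks: [{bandwidth_mbps: 50}],
    volumes: [{name: "boot", size_mb: 51200}],
    ssh_key_ids: [$key]
  }')
  # --fail makes curl exit non-zero on HTTP errors, which set -e then catches
  curl -sS --fail -X POST "$API/servers" \
    -H "content-type: application/json" \
    -H "x-api-key: $TOKEN" \
    -d "$BODY"
elif [[ $ACTION == "delete" ]]; then
  # List the servers and pick the first one whose name matches exactly
  VM_ID=$(curl -sS --fail "$API/servers" -H "x-api-key: $TOKEN" \
    | jq -r --arg name "$NAME" '[.servers[] | select(.name == $name)][0].id // empty')
  if [[ -z "$VM_ID" ]]; then
    echo "VM with name '$NAME' not found" >&2
    exit 1
  fi
  curl -sS --fail -X DELETE "$API/servers/$VM_ID" -H "x-api-key: $TOKEN"
else
  echo "Unknown action: $ACTION" >&2
  exit 1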

fi
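Cloud API calls can fail transiently, for example when requests are rate limited. The sketch below wraps a command in a minimal exponential backoff loop. It assumes that simply re-running the command is safe, which is true for a GET lookup. Think twice before retrying a POST that may already have succeeded on the server. retry_with_backoff, MAX_ATTEMPTS and BASE_DELAY are hypothetical names, not Serverspace settings.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command, retrying with exponential backoff.
# MAX_ATTEMPTS (default 5) caps the tries; BASE_DELAY (default 1, integer
# seconds) is the first wait, doubled after every failure.
retry_with_backoff() {
  local max=${MAX_ATTEMPTS:-5} delay=${BASE_DELAY:-1} attempt=1
  until "$@"; do
    if (( attempt >= max )); then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    echo "attempt $attempt failed, retrying in ${delay}s" >&2
    sleep "$delay"
    delay=$(( delay * 2 ))
    attempt=$(( attempt + 1 ))
  done
  return 0
}

# Example: retry_with_backoff curl -sS --fail "$API/servers" -H "x-api-key: $TOKEN"
```

This sketch retries on any failure. Retrying only helps for transient errors such as HTTP 429 or 5xx, not for a malformed request.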

Step 3. Schedule periodic execution from root's crontab (crontab -e). Inside crontab entries, % must be escaped as \%:

0 6 * * * /usr/local/bin/vm_manager.sh create auto-vm-$(date +\%Y\%m\%d) >> /var/log/vm_manager.log 2>&1
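The daily create job above never removes old VMs. To pair it with a cleanup job, you can derive the name of the VM created N days earlier. The sketch below assumes the auto-vm-YYYYMMDD naming from the cron entry and GNU date; old_vm_name is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: name of the VM the daily job created N days ago,
# following its auto-vm-YYYYMMDD pattern. Requires GNU date (-d).
old_vm_name() {
  local days=$1
  if [[ ! $days =~ ^[0-9]+$ ]]; then
    echo "days must be a non-negative integer" >&2
    return 1
  fi
  printf 'auto-vm-%s\n' "$(date -d "$days days ago" +%Y%m%d)"
}

# Equivalent cleanup entry for root's crontab (note the escaped %),
# deleting the VM created 7 days earlier:
# 30 6 * * * /usr/local/bin/vm_manager.sh delete auto-vm-$(date -d '7 days ago' +\%Y\%m\%d) >> /var/log/vm_manager.log 2>&1
```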

Cloud API FAQ

Q: How should I store the token securely?

A: Keep it in a file readable only by its owner (mode 600), keep it out of version control, and read it in scripts with $(< file).

Technical Details: Serverspace API

- Error handling: check HTTP status codes and parse JSON with jq.
- Pagination: follow the next field.
- Rate limiting: implement exponential backoff.

Containerization and Networking

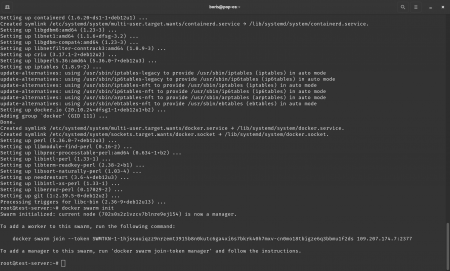

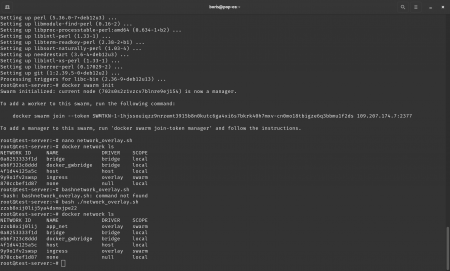

Docker Swarm and overlay networks

Docker Swarm offers built-in container orchestration with support for overlay networks. Overlay networks create a virtual network layer across physical hosts using VXLAN for traffic encapsulation.

How-To: Automated overlay network deployment

Step 1. Initialize Swarm

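docker swarm init

If the VM has more than one network interface, Docker asks you to choose the address other nodes should use: docker swarm init --advertise-addr <private-IP>.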

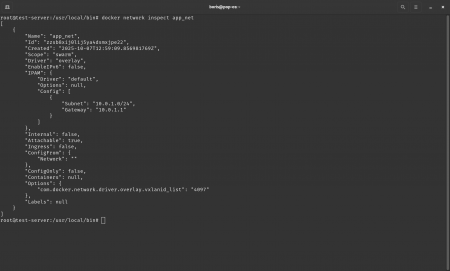

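Step 2. Network creation script /usr/local/bin/setup_overlay.sh

#!/usr/bin/env bash
set -euo pipefail
# Create the overlay network only if it does not exist yet. Other failures
# (for example, Swarm not initialized) are reported instead of hidden.
if ! docker network inspect app_net >/dev/null 2>&1; then
  docker network create -d overlay --attachable app_net
fi

Make it executable:

chmod 755 /usr/local/bin/setup_overlay.sh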

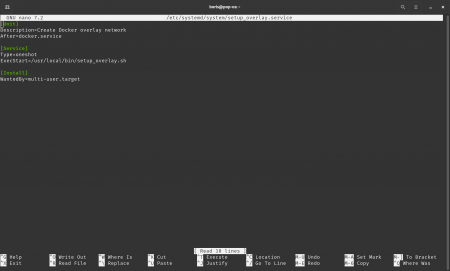

Step 3. Systemd unit file /etc/systemd/system/setup_overlay.service

[Unit]

Description=Create Docker overlay network

After=docker.service

Requires=docker.service

[Service]

Type=oneshot

ExecStart=/usr/local/bin/setup_overlay.sh

[Install]

WantedBy=multi-user.target

Step 4. Enable the unit

sudo systemctl daemon-reload

sudo systemctl enable --now setup_overlay.service
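Swarm nodes must reach each other on these ports:

- TCP 2377: cluster management.
- TCP and UDP 7946: node communication.
- UDP 4789: VXLAN overlay traffic.

The sketch below prints matching ufw rules so you can review them before applying. It assumes ufw is the host firewall. The function name and the default subnet 10.0.0.0/24 are hypothetical; substitute the private network your nodes share.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print (not apply) ufw rules for the Swarm ports,
# restricted to the private subnet the nodes share.
swarm_ufw_rules() {
  local subnet=${1:-10.0.0.0/24}
  local rule
  for rule in 2377/tcp 7946/tcp 7946/udp 4789/udp; do
    # ${rule%/*} is the port, ${rule#*/} the protocol
    printf 'ufw allow from %s to any port %s proto %s\n' \
      "$subnet" "${rule%/*}" "${rule#*/}"
  done
}

# Review the output, then apply it: swarm_ufw_rules 10.0.0.0/24 | sudo sh
```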

Swarm FAQ

Q: Why doesn’t the overlay network appear on worker nodes?

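A: Swarm extends an overlay network to a worker only when a service task or container attached to that network is scheduled there. An idle worker therefore does not list app_net in docker network ls. If a task is running and the network is still missing, check two things:

- TCP ports 2377 and 7946 and UDP ports 7946 and 4789 are open between the nodes.
- All nodes belong to the same Swarm.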

Technical Details: Swarm overlay

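- Diagnostics: docker node ls, docker network inspect app_net, ip -d link show.
- Docker logs: journalctl -u docker.service --since today.
- Overlay encryption: docker network create -d overlay --opt encrypted <network> enables IPsec encryption of VXLAN traffic. The nodes must then also allow IP protocol 50 (ESP) between them.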

Kernel-Level Monitoring with eBPF

eBPF: monitoring system calls

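eBPF lets you run sandboxed programs inside the kernel; a verifier checks each program before it is loaded. This makes low-overhead, high-fidelity tracing of system calls practical. The examples below use execsnoop from the BCC toolkit, which traces new processes started through the exec() family of system calls.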

How-To: Automated metrics collection

Step 1. Install dependencies

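sudo apt install bpfcc-tools linux-headers-$(uname -r)

On Debian and Ubuntu, the BCC tools are installed with a -bpfcc suffix, so execsnoop is run as execsnoop-bpfcc.

Step 2. Collection script /usr/local/bin/monitor_execs.sh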

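#!/usr/bin/env bash
# Capture process executions for DURATION seconds into a timestamped log.
# Run as root, for example from root's crontab.
DURATION=60
LOGFILE="/var/log/execsnoop-$(date +%F_%H%M%S).log"
# execsnoop has no duration option and runs until interrupted. timeout sends
# SIGINT after DURATION seconds so it exits cleanly. -t adds a timestamp column.
timeout -s INT "$DURATION" execsnoop-bpfcc -t > "$LOGFILE"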

Step 3. Schedule periodic runs from root's crontab (each run samples 60 seconds out of every 5 minutes)

*/5 * * * * /usr/local/bin/monitor_execs.sh
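To turn raw captures into simple metrics, each log can be summarized per command. The sketch below counts executions per command name. top_execs is a hypothetical name, and the function rests on three assumptions about the log layout:

- The first line is execsnoop's header.
- The first column is a timestamp, as produced with -t or -T.
- The second column is the command name, so names containing spaces would be split.

```bash
#!/usr/bin/env bash
# Hypothetical helper: "count command" pairs from one execsnoop capture,
# most frequent first. Skips the header line; column 2 is the command name.
top_execs() {
  local logfile=$1 limit=${2:-10}
  awk 'NR > 1 && NF >= 2 { count[$2]++ }
       END { for (c in count) print count[c], c }' "$logfile" \
    | sort -k1,1nr -k2,2 \
    | head -n "$limit"
}

# Example: top_execs "$LOGFILE" 5 | logger -t execsnoop-top
```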

eBPF FAQ

Q: How do I keep log volume under control?

A: Use logrotate, for example with the following /etc/logrotate.d/execsnoop:

/var/log/execsnoop-*.log {

daily

rotate 7

compress

missingok

notifempty

}
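Each run of the collection script writes a new, uniquely named file. logrotate's rotate 7 limit therefore applies to each file separately and does not cap how many capture files accumulate. A minimal age-based alternative is sketched below; the function name and defaults are hypothetical. Note that find's -mtime rounds ages down to whole days.

```bash
#!/usr/bin/env bash
# Hypothetical helper: delete execsnoop captures (and rotated copies) older
# than N days in DIR. Prints each deleted path. Defaults: /var/log, 7 days.
prune_execsnoop_logs() {
  local dir=${1:-/var/log} days=${2:-7}
  find "$dir" -maxdepth 1 -type f \
    \( -name 'execsnoop-*.log' -o -name 'execsnoop-*.log.*' \) \
    -mtime +"$days" -print -delete
}
```

Calling prune_execsnoop_logs /var/log 7 at the end of the collection script keeps roughly one week of captures.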

Technical Details: eBPF

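- Filtering by user: execsnoop-bpfcc -u <UID>, for example -u "$(id -u www-data)".
- Stack traces: execsnoop has no stack-trace option. BCC's stackcount tool can count the kernel stacks leading to exec, for example stackcount-bpfcc t:syscalls:sys_enter_execve.
- Log forwarding: logger -t execsnoop < "$LOGFILE".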

Backup and Recovery

Incremental backup to S3-compatible storage

Step 1. Install AWS CLI and configure profile

sudo apt install awscli

aws configure --profile serverspace

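Step 2. Backup script /usr/local/bin/incremental_backup.sh

#!/usr/bin/env bash
set -euo pipefail
SRC="/var/www"
DEST="s3://my-serverspace-bucket/$(hostname)"
# Placeholder: set this to the S3 endpoint shown in your Serverspace storage settings
ENDPOINT="https://YOUR_S3_ENDPOINT"
# sync uploads only new or changed files. --delete also removes remote objects
# whose local copies were deleted, so the bucket mirrors SRC.
# Remove --storage-class if your S3-compatible storage does not support STANDARD_IA.
aws s3 sync "$SRC" "$DEST" \
  --profile serverspace \
  --endpoint-url "$ENDPOINT" \
  --storage-class STANDARD_IA \
  --delete \
  --no-progress

Make it executable with chmod 755 /usr/local/bin/incremental_backup.sh. Because --delete propagates deletions, enable bucket versioning if your storage supports it, so deleted or overwritten files remain recoverable.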

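Step 3. systemd service and timer

Save the service as /etc/systemd/system/incremental_backup.service:

[Unit]

Description=Incremental backup to S3

[Service]

Type=oneshot

ExecStart=/usr/local/bin/incremental_backup.sh

Save the timer as /etc/systemd/system/incremental_backup.timer:

[Unit]

Description=Run incremental backup hourly

[Timer]

OnCalendar=hourly

Persistent=true

[Install]

WantedBy=timers.target

Enable the timer:

sudo systemctl daemon-reload

sudo systemctl enable --now incremental_backup.timer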

Backup FAQ

Q: How do I verify the backup?

A: Run aws s3 sync --dryrun and compare with aws s3 ls.
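To automate that check, count the operations a dry run would perform. Zero pending uploads or deletes means the bucket matches the local tree by the size and timestamp criteria aws s3 sync uses. The sketch below assumes the CLI prints one line per pending operation, prefixed with "(dryrun) "; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "aws s3 sync --dryrun" output on stdin and print
# how many operations (uploads, deletes, copies) are still pending.
pending_sync_ops() {
  # grep -c prints 0 and exits 1 when nothing matches; keep status 0
  grep -c '^(dryrun) ' || true
}

# Example, with the same options as the backup script (plus any --endpoint-url it uses):
# n=$(aws s3 sync /var/www "s3://my-serverspace-bucket/$(hostname)" \
#       --profile serverspace --delete --dryrun | pending_sync_ops)
# [ "$n" -eq 0 ] && echo "remote copy is up to date"
```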

Technical Details: S3 backup

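- Parallelism: aws configure set s3.max_concurrent_requests 20 --profile serverspace.
- Throttling: cap bandwidth with aws configure set s3.max_bandwidth 50MB/s --profile serverspace, and lower concurrency if needed. --no-progress keeps cron and journal logs free of progress output.
- Key rotation: rotate access keys regularly.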

Transitioning from cron to systemd-timer delivers enhanced logging and reliability. API automation in Serverspace minimizes manual operations. Docker Swarm with overlay networks scales microservices. eBPF enables high-performance kernel monitoring. Incremental S3 backups save bandwidth and time. Integrating these technologies builds a robust, secure, and scalable DevOps ecosystem.

It is recommended to test all scripts on a Serverspace VM, adapt them to your infrastructure, and integrate them into your CI/CD pipelines. A final e-book checklist “Linux for DevOps on Serverspace” is planned, featuring all examples and best practices.