What is Kubernetes?

Application deployment has changed considerably in recent years, and containerisation has become widespread. Containerisation packages an app and its dependencies into an isolated container that can be deployed and run in virtually any runtime environment. Kubernetes is a popular container orchestration platform: it provides tools to automate the deployment, scaling and management of containerised applications, reducing the complexity and effort these operations require.

K8s: what is it in simple terms?

Kubernetes (k8s) is an open-source, extensible platform for automating the deployment, scaling and management of containerised applications. It was originally developed at Google. It provides a set of features and tools for efficiently managing containerised apps in distributed environments.

K8s key benefits:

- Automated container deployment and management. Kubernetes lets developers manage a container cluster efficiently, with tools for starting, stopping and monitoring containers and for balancing load between cluster nodes.

- Horizontal and vertical scalability. With autoscaling configured, Kubernetes responds to load changes by increasing or decreasing the number of container instances to maintain performance and availability.

- Built-in self-healing. Kubernetes detects failed containers and nodes and automatically restarts or replaces the affected containers, keeping applications available.

- Declarative model. Developers define the desired state of the system, and Kubernetes continuously works to bring the actual state in line with it. This simplifies deploying and managing apps and keeps the environment consistent and predictable.

- Support for different environments. Kubernetes allows containers to be deployed on different platforms, including cloud providers (e.g. AWS, Google Cloud, Microsoft Azure) and your own on-premises servers. This gives flexibility in the choice of infrastructure.
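The declarative model and self-healing described above can be illustrated with a toy reconciliation loop. This is only a sketch of the idea, not Kubernetes code: `ToyCluster`, `reconcile` and the `web-N` replica names are hypothetical, and a real controller works through the Kubernetes API rather than an in-memory list.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Hypothetical stand-in for a cluster: it only tracks running replica names."""
    running: list = field(default_factory=list)
    next_id: int = 0

    def start_replica(self) -> None:
        self.running.append(f"web-{self.next_id}")
        self.next_id += 1

    def stop_replica(self) -> None:
        self.running.pop()


def reconcile(cluster: ToyCluster, desired_replicas: int) -> list:
    """Compare actual state with desired state and return the actions taken."""
    actions = []
    while len(cluster.running) < desired_replicas:
        cluster.start_replica()
        actions.append("start")
    while len(cluster.running) > desired_replicas:
        cluster.stop_replica()
        actions.append("stop")
    return actions


cluster = ToyCluster()
print(reconcile(cluster, 3))      # three "start" actions reach the desired state
cluster.running.remove("web-1")   # simulate a crashed container
print(reconcile(cluster, 3))      # one "start" action replaces the lost replica
print(reconcile(cluster, 3))      # empty list: actual state already matches
```

The operator never says "start a container"; they only state the desired count, and the loop derives the actions. The same loop also covers recovery after a failure, which is why the declarative model and self-healing go together.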

K8s history

The history of Kubernetes began with Google's project to develop a system for managing scalable applications internally. In 2003, Google began using a system called Borg to manage its distributed applications and resources across the company. Borg provided automatic deployment, scaling, monitoring, and resource management.

In 2014, Google engineers decided to create an open and accessible version of a container management system based on the experience and principles of Borg. They began developing a project called Kubernetes. Google believed that containerisation and app orchestration were important parts of developing and managing modern apps, and decided to share their knowledge and tools with the wider developer community.

Google announced the first public version of Kubernetes in June 2014 and released the platform as open source. When version 1.0 was released in July 2015, Google donated the project to the newly formed Cloud Native Computing Foundation (CNCF), an organization that supports the development of cloud native technologies and projects. Since its release, Kubernetes has received widespread support and attracted tremendous attention from the developer community and industry. It has become the de facto standard for managing containerised applications and is now one of the most actively developed projects in the CNCF.

Kubernetes continues to evolve today. More and more companies and organizations use it to manage their apps across different infrastructures. It provides powerful tools for deploying, scaling and managing applications on various platforms, including cloud providers such as Serverspace and on-premises environments.

Kubernetes has changed significantly in recent years, with many new versions bringing new features and improvements, and is now an integral part of modern DevOps and cloud application development. Companies and organisations around the world actively use it to manage their containerised applications, and the ecosystem around the platform keeps growing.

Kubernetes architecture

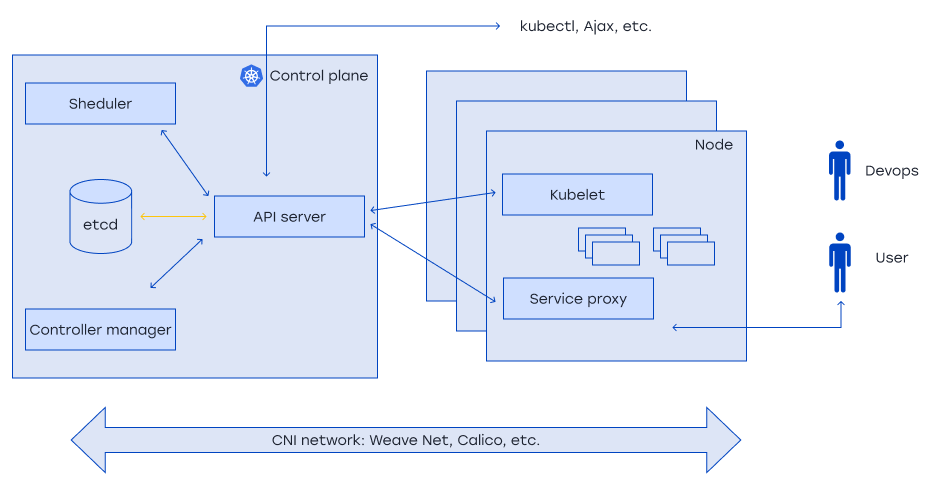

1. Master Node, or Control Plane Node. Most of the important tasks of managing and administering a k8s cluster are performed here. It consists of four main components:

- The API Server exposes the Kubernetes API, the interface through which users and all other components communicate with the cluster.

- Controllers monitor the cluster and drive it towards the desired state; examples include the Deployment Controller and the Replication Controller.

- The Scheduler assigns pods to worker nodes based on resource requirements and placement policies.

- etcd is a distributed key-value store that holds the cluster configuration and state.
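To make the Scheduler's role more concrete, here is a minimal sketch of placing a pod by resource requirements: first filter out nodes that cannot fit the pod, then pick the best of the rest. The function `schedule`, the node names and the scoring rule (most free CPU wins) are assumptions made for illustration; the real scheduler applies many more filtering and scoring rules.

```python
from typing import Optional


def schedule(pod_request: dict, nodes: dict) -> Optional[str]:
    """Pick a node for a pod.

    pod_request and each node entry hold "cpu" (millicores) and "memory" (MiB).
    Step 1 (filter): keep only nodes with enough free CPU and memory.
    Step 2 (score): prefer the node with the most free CPU (illustrative rule).
    """
    feasible = {
        name: free
        for name, free in nodes.items()
        if free["cpu"] >= pod_request["cpu"] and free["memory"] >= pod_request["memory"]
    }
    if not feasible:
        return None  # no node fits, so the pod would stay unscheduled
    # sorted() makes ties deterministic: the alphabetically first node wins
    return max(sorted(feasible), key=lambda name: feasible[name]["cpu"])


# Hypothetical free resources per worker node
nodes = {
    "node-a": {"cpu": 500, "memory": 1024},
    "node-b": {"cpu": 2000, "memory": 4096},
    "node-c": {"cpu": 1500, "memory": 256},
}

print(schedule({"cpu": 1000, "memory": 512}, nodes))   # node-b is the only node that fits
print(schedule({"cpu": 4000, "memory": 512}, nodes))   # None: no node has 4000m CPU free
```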

2. Nodes are the physical or virtual machines on which the containers run. Each such machine is a Worker Node in the Kubernetes cluster: it is the execution environment for the containers and provides the resources they need. Each node runs a Kubernetes agent called kubelet, which manages the lifecycle of containers and pods on that node.

Each node has its own computing resources (CPU, memory, storage) and network interfaces. Nodes are connected to the Master Nodes over the network to report cluster status and receive instructions for placing and managing containers. Each worker node runs three components:

- Kubelet is an agent installed on each worker node that manages the containers in the pods located on that node;

- Kube-proxy provides network connectivity for pods, including proxying and load balancing;

- Container Runtime is responsible for running and managing containers, e.g. containerd or CRI-O. Docker Engine can also be used, but since Kubernetes 1.24 it requires the cri-dockerd adapter.

3. Pods are the smallest deployable unit in Kubernetes. A pod groups one or more containers and gives them a shared, isolated runtime environment, which makes pods the basic building block for hosting and managing containers.

4. Services are an abstraction that provides a stable access point to a group of pods and balances load between them.

5. Optional components:

- The Replication Controller runs and manages multiple instances of a pod to improve application resilience and scalability. In current Kubernetes versions this role is usually filled by Deployments and ReplicaSets.

- Persistent Volumes allow applications to retain data in persistent storage even when restarting or moving pods.

- Configuration and secrets: ConfigMaps store configuration data, and Secrets store sensitive information such as passwords or access keys.

- An Ingress Controller manages incoming network traffic to the cluster, allowing routing and load balancing to be configured for services.

- Namespaces are used for logical partitioning and isolation of resources in the cluster, allowing the creation of virtual groups for applications and users.

- Monitoring and logging: Kubernetes integrates with systems such as Prometheus, Grafana, ELK Stack and others to provide observability and analysis of cluster and application health.
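Returning to Services from the list above: a Service finds its group of pods by matching labels, then spreads requests across the matching pods. The sketch below models that idea in plain Python. The pod names, IP addresses and the `select_endpoints` helper are hypothetical, and round-robin is an illustrative choice; how traffic is actually distributed depends on the kube-proxy mode.

```python
from itertools import cycle

# Hypothetical pods with labels, as they might exist in a cluster
pods = [
    {"name": "web-1", "ip": "10.0.0.11", "labels": {"app": "web"}},
    {"name": "web-2", "ip": "10.0.0.12", "labels": {"app": "web"}},
    {"name": "db-1", "ip": "10.0.0.21", "labels": {"app": "db"}},
]


def select_endpoints(pods: list, selector: dict) -> list:
    """Return IPs of pods whose labels contain every key/value pair in the selector."""
    return [
        pod["ip"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


# The "Service": one stable selector in front of a changing set of pods
endpoints = select_endpoints(pods, {"app": "web"})
backend = cycle(endpoints)

# Route four requests; they alternate between the two web pods
routed = [next(backend) for _ in range(4)]
print(routed)  # ['10.0.0.11', '10.0.0.12', '10.0.0.11', '10.0.0.12']
```

Because clients address the selector rather than individual pods, pods can be replaced or rescheduled without clients noticing.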

Kubernetes tasks

Kubernetes performs a number of important container management tasks:

- Container deployment and status management. Kubernetes can start, stop and restart containers, which ensures that the required applications are running and kept up to date.

- Scaling applications. Kubernetes allows applications to be scaled by running multiple containers simultaneously across a large number of hosts. This makes more efficient use of resources and improves system resilience.

- Load balancing. Kubernetes distributes incoming traffic across the containers that back an application, which helps keep system performance stable. Through the Kubernetes API, containers are grouped logically and their pools and placement are defined, which promotes efficient use of resources.
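The scaling task above can be made concrete with the replica calculation that the Kubernetes documentation gives for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The sketch below applies that formula and clamps the result to a range. The function name `desired_replicas` and the default bounds are assumptions for illustration, and the real autoscaler also applies a tolerance and stabilisation behaviour that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,    # illustrative default
    max_replicas: int = 10,   # illustrative default
) -> int:
    """Scale the replica count in proportion to how far the metric is from its target."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 60% target: scale out to 6
print(desired_replicas(4, 90, 60))    # 6
# 4 replicas at 30% average CPU with a 60% target: scale in to 2
print(desired_replicas(4, 30, 60))    # 2
# The result never exceeds max_replicas
print(desired_replicas(5, 200, 50))   # 10
```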

Kubernetes installation process

You can order the Kubernetes service in the Serverspace control panel by selecting the version and cluster location and configuring the CPU, RAM and number of nodes. You can also enable the Dashboard there. But how do you start working with the cluster?

You can create and delete clusters, change their configuration, and add and remove nodes via our control panel. For other tasks, you will need command-line tools designed specifically for working with clusters.

kubectl is the Kubernetes command-line utility. With it, you can run a variety of commands against your Kubernetes clusters: deploy applications, monitor and manage cluster resources, and view logs. For a full list of kubectl features, see the official documentation on the Kubernetes website.

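Let's look at installing the utility on Ubuntu. The legacy apt.kubernetes.io repository has been shut down, so the commands below use the community-owned pkgs.k8s.io repository. The v1.30 in the URLs is an example minor version; replace it with the one that matches your cluster. Run the following commands:

sudo apt-get update && sudo apt-get install -y apt-transport-https ca-certificates curl gnupg

sudo mkdir -p -m 755 /etc/apt/keyrings

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update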

sudo apt-get install -y kubectl

To connect to a Kubernetes cluster from the command line, you will need a configuration file containing authentication certificates and other connection information. Follow these steps to download the file to your computer or server and import it.

Download the configuration file to your computer or server, then set the KUBECONFIG environment variable to its path so that kubectl uses it:

export KUBECONFIG=pathtofile

You can now connect to the cluster.
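A wrong path in KUBECONFIG is a common cause of connection problems, so it can help to check the files before running kubectl. The sketch below lists which configured files are missing. It relies on two documented kubectl behaviours: KUBECONFIG may hold several paths joined by the operating system's path separator, and `~/.kube/config` is used when the variable is unset. The helper names `kubeconfig_paths` and `missing_kubeconfigs` are hypothetical.

```python
import os
from pathlib import Path


def kubeconfig_paths(env=None) -> list:
    """Return the kubeconfig files kubectl would consider, in order."""
    env = os.environ if env is None else env
    raw = env.get("KUBECONFIG", "")
    if raw:
        # KUBECONFIG may list several files: ":" on Linux/macOS, ";" on Windows
        return [Path(part) for part in raw.split(os.pathsep) if part]
    # Default location used when KUBECONFIG is not set
    return [Path.home() / ".kube" / "config"]


def missing_kubeconfigs(env=None) -> list:
    """Return the configured kubeconfig paths that do not exist as files."""
    return [path for path in kubeconfig_paths(env) if not path.is_file()]


# Report any configured files that are missing on this machine
for path in missing_kubeconfigs():
    print(f"kubeconfig not found: {path}")
```

If the loop prints nothing, every configured file exists, and any remaining connection problem lies elsewhere (for example in the server address or the certificates inside the file).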

To check the connection to the cluster, get the cluster status information:

kubectl cluster-info

A successful connection returns the address at which the Control Plane is running:

Kubernetes control plane is running at https://XXX.XXX.XXX.XXX:YYY

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

If kubectl is not configured correctly or cannot connect to the Kubernetes cluster, the following message will appear in the console:

The connection to the server "xxx.xxx.xxx.xxx:yyy" was refused - did you specify the right host or port?
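For scripted checks, the two outcomes above can be told apart by looking for the control plane line in the captured output. The sketch below assumes the output of `kubectl cluster-info` has already been captured as text; the function name `control_plane_url` and the sample address are hypothetical. Terminal colour codes are stripped first, since they may be present in the output.

```python
import re
from typing import Optional

# Matches ANSI colour sequences such as "\x1b[0;32m"
ANSI_ESCAPE = re.compile(r"\x1b\[[0-9;]*m")
CONTROL_PLANE = re.compile(r"Kubernetes control plane is running at (\S+)")


def control_plane_url(cluster_info_output: str) -> Optional[str]:
    """Extract the control plane address, or return None if it is not reported."""
    clean = ANSI_ESCAPE.sub("", cluster_info_output)
    match = CONTROL_PLANE.search(clean)
    return match.group(1) if match else None


ok = "Kubernetes control plane is running at https://203.0.113.10:6443\n"
refused = (
    "The connection to the server 203.0.113.10:6443 was refused"
    " - did you specify the right host or port?\n"
)

print(control_plane_url(ok))       # https://203.0.113.10:6443
print(control_plane_url(refused))  # None
```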

Use the following command to diagnose connection errors, which outputs detailed information:

kubectl cluster-info dump

You can use the Kubernetes cheat sheet to work with kubectl and become familiar with the basic Kubernetes commands.